Inner Vision Pictures

What is Virtual Production?

Virtual production is a filmmaking technique that integrates real-time computer-generated imagery (CGI) with live-action footage during production, using advanced technologies like LED walls, game engines, and motion capture. It allows filmmakers to create and visualize complex digital environments, characters, or effects on set, blending physical and virtual elements seamlessly.

How Virtual Production Works:

Pre-Production Planning:

Filmmakers design digital assets (e.g., environments, characters) using software like Unreal Engine, Unity, or Autodesk Maya.

Storyboards and previsualization (previs) map out scenes, ensuring virtual and physical elements align.

Real-Time Rendering:

Game engines (e.g., Unreal Engine) render high-quality CGI in real time, allowing instant visualization of digital environments or effects.

For many shots, this replaces traditional green screens, whose footage must be composited in post-production.

LED Wall Technology:

Massive LED screens (e.g., ILM’s StageCraft) display dynamic, photorealistic digital backgrounds that react to camera movements via tracking systems.

Actors perform in front of these screens, seeing the virtual environment instead of a green screen, enhancing performances and immersion.

Camera Tracking and Integration:

Cameras are equipped with sensors to sync their movements with the virtual environment, ensuring accurate perspective and parallax effects (e.g., backgrounds shift realistically as the camera moves).

Systems like Unreal Engine’s nDisplay, or proprietary stages such as ILM’s StageCraft (the “Volume” used on Disney productions), enable this precision.
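The parallax behaviour described above comes down to simple geometry, and a minimal sketch may make it concrete. It assumes a flat LED wall on the plane z = 0, a tracked camera in front of it (negative z), and virtual objects behind it (positive z), all in metres. The names `Point3D` and `wall_position` are hypothetical and do not come from Unreal Engine, nDisplay, or any tracking product; real systems also handle lens distortion, curved walls, and latency, which this sketch ignores.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Point3D:
    """A position in stage coordinates, in metres (illustrative convention)."""
    x: float
    y: float
    z: float


def wall_position(camera: Point3D, virtual_point: Point3D,
                  wall_z: float = 0.0) -> Tuple[float, float]:
    """Return the (x, y) spot on the wall where a virtual point must be drawn
    so that it appears in the right place from the camera's position.

    The spot is where the straight line from the camera to the virtual
    point crosses the wall plane z = wall_z.
    """
    if camera.z >= wall_z:
        raise ValueError("camera must be in front of the wall")
    if virtual_point.z < wall_z:
        raise ValueError("virtual point must be on or behind the wall")
    # Fraction of the way from the camera to the virtual point at which
    # the line of sight meets the wall.
    t = (wall_z - camera.z) / (virtual_point.z - camera.z)
    x = camera.x + t * (virtual_point.x - camera.x)
    y = camera.y + t * (virtual_point.y - camera.y)
    return (x, y)


if __name__ == "__main__":
    # The camera dollies 1 m to the right, staying 4 m from the wall.
    start = Point3D(0.0, 1.5, -4.0)
    end = Point3D(1.0, 1.5, -4.0)

    near_sign = Point3D(0.0, 1.5, 2.0)         # 2 m behind the wall
    far_mountain = Point3D(0.0, 1.5, 200.0)    # 200 m behind the wall

    for name, obj in (("near sign", near_sign), ("far mountain", far_mountain)):
        x0, _ = wall_position(start, obj)
        x1, _ = wall_position(end, obj)
        print(f"{name}: moves {x1 - x0:.2f} m across the wall")
```

In this setup the distant mountain slides about 0.98 m across the wall, almost following the camera, while the nearby sign moves only about 0.33 m. Seen through the lens, that difference is what makes the far background appear nearly fixed and the near object sweep past, as it would on a real location.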

Motion Capture:

Actors’ movements or expressions are captured in real time using suits or facial rigs, instantly animating digital characters on the LED wall or in the scene.

On-Set Visualization:

Directors and cinematographers see the combined live-action and CGI footage in real time via monitors, allowing immediate adjustments to lighting, framing, or performances.

Post-Production (Minimal):

Since much of the CGI is finalized on set, post-production focuses on refining details rather than extensive compositing.

Key Technologies and Tools:

Game Engines: Unreal Engine and Unity are industry standards for real-time rendering, offering cinematic-quality visuals with interactive capabilities.

LED Walls: High-resolution screens (e.g., ROE Visual, Planar) create immersive environments with realistic lighting and reflections.

Motion Capture Systems: Tools like Vicon or OptiTrack capture actor movements for digital characters.

Camera Tracking: Systems like Ncam or Stype sync camera movements with virtual scenes for precise alignment.

AR/VR Integration: Augmented and virtual reality headsets support virtual scouting, letting directors preview scenes before shooting.

Applications in Film (Relevant to Montana Mischief):

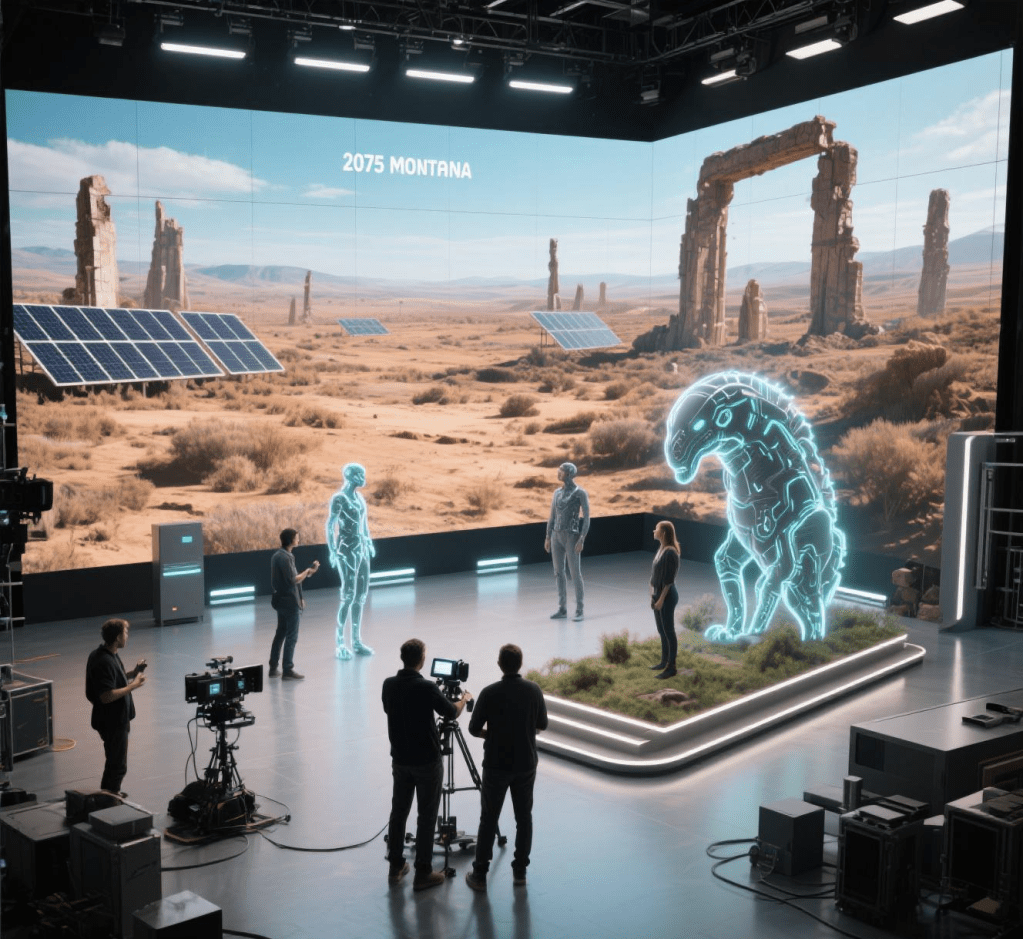

For a futuristic action film like Montana Mischief, virtual production would be transformative:

Dystopian Montana: LED walls could display sprawling cyber-cities or desolate wastelands, immersing actors in a 2075 Montana without costly location shoots.

Dynamic Action Sequences: Real-time CGI enables filming complex battles (e.g., aerial dogfights, cybernetic creatures) with immediate feedback, ensuring precision.

Cost Efficiency: Reduces reliance on extensive post-production by capturing near-final visuals on set, critical for a $150M budget.

IMAX Appeal: High-resolution LED walls and real-time rendering ensure visuals are optimized for large-format screens, maximizing audience impact.

Advantages:

Real-Time Feedback: Directors can adjust scenes on the fly, reducing costly reshoots.

Enhanced Performances: Actors can see and react to the digital environment around them, improving authenticity over green-screen acting.

Cost and Time Savings: Minimizes post-production by capturing final or near-final visuals during filming.

Flexibility: Virtual sets can be tweaked instantly (e.g., changing lighting or weather), unlike physical sets.

Sustainability: Reduces travel and physical set construction, lowering environmental impact.

Challenges:

High Initial Costs: LED walls, game engines, and tracking systems require significant upfront investment.

Technical Expertise: Demands skilled technicians, VFX artists, and game engine specialists on set.

Limitations: Not ideal for every scene; some effects (e.g., intricate particle simulations) may still require traditional CGI in post-production.

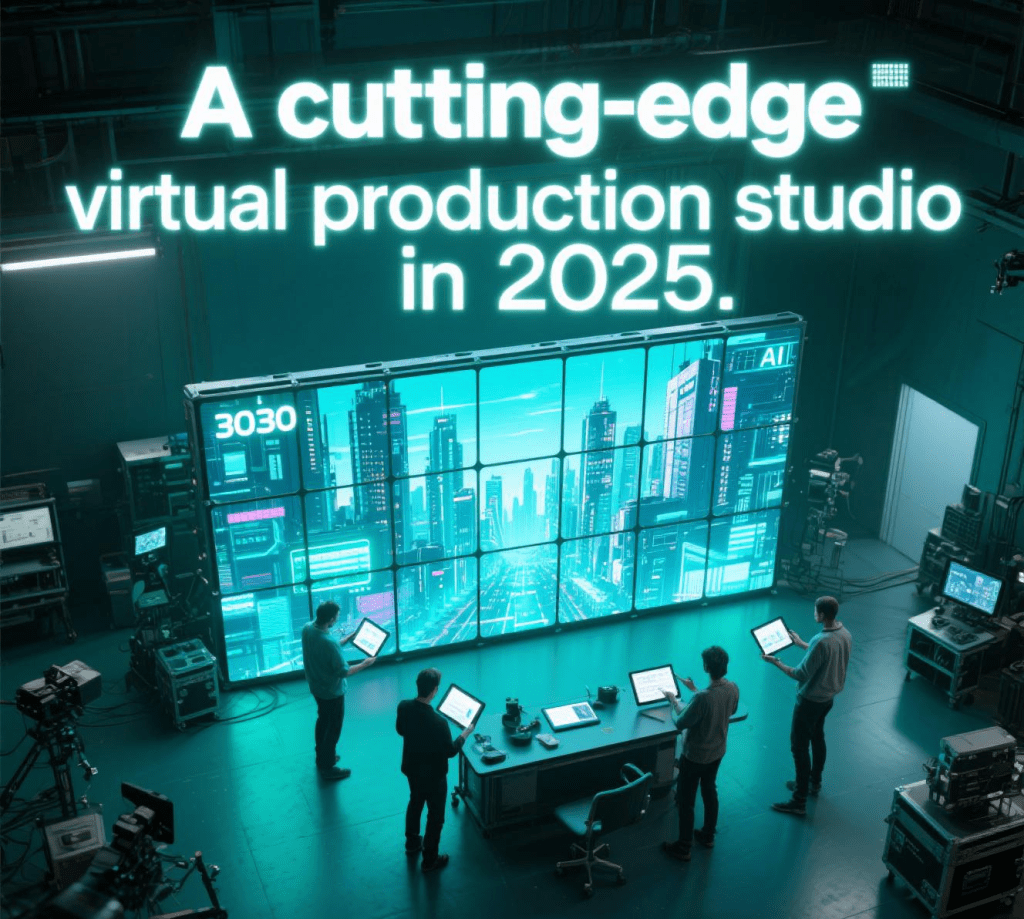

Current Trends (2025):

Widespread Adoption: Popularized by The Mandalorian and The Batman, virtual production is now common on big-budget films.

AI Integration: AI tools streamline asset creation and optimize real-time rendering, reducing setup time.

Portable LED Stages: Smaller, modular LED walls make virtual production accessible for mid-budget films.

Hybrid Approaches: Combining virtual production with traditional CGI and practical effects for maximum realism, as seen in Avatar: The Way of Water.

Relevance to Montana Mischief:

For a big-budget futuristic action film, virtual production could create a visually spectacular Earth of 3030, blending cyberpunk cities and vast wastelands with real-time action sequences. Using LED walls and Unreal Engine, the production could capture IMAX-ready visuals on set, reducing post-production costs while preserving the film’s blockbuster scale. This approach would also allow the director to fine-tune futuristic elements (e.g., holographic interfaces, robotic enemies) live, enhancing creative control and efficiency.